Trust Structures for Machine-Conjuring of Reality

People conjure a reality by naming things.

- We see what we understand, and

- “Word Magic” creates understandable reality when a name exists.

o Source: Ogden, C. K., and Richards, I. A. (1927 [2013 reprint]). The Meaning of Meaning: A Study of the Influence of Language upon Thought and of the Science of Symbolism. Martino Fine Books.

More recently, data-driven machines have also begun to conjure a reality through prediction & classification.

The vital question becomes:

- Whose conjured reality can we trust in order to make good individual decisions?

Conjuring Reality with Accounting

Stacy Mastrolia and her co-authors show how accounting conjures economic reality through choices about:

- Which items are worth categorizing,

- How to name the categories, and

- Which instances to place in those categories.

o Source: Mastrolia, Stacy A., Zielonka, Piotr, and McGoun, Elton G. (2014). Conjuring a reality: The Magic of the Practice of Accounting. Management and Business Administration. Central Europe, Vol. 22, No. 3(126), pp. 3-17. Kozminski University.

If magicians use methods to steer an audience’s perception of reality away from common-sense understanding, then accountants use methods to shape an audience’s perception of an economic reality that has “… no inherent accounting form”:

- “… a reality is created by every accounting representation”, and

- These realities, conjured by accounting naming conventions, are artifacts of opinion that carry official certification.

Willful blindness, as well as unintended attentional blindness, comes from the brain’s inability to multitask:

- Focusing on one thing precludes focus on another.

Thus, the human conjurer can create realities with methodological devices that include:

- Concealment

- Substitution, and

- Simulation.

o Source: Lamont, P., and Wiseman, R. (1999). Magic in Theory: An Introduction to the Theoretical and Psychological Elements of Conjuring. Hatfield, Hertfordshire: University of Hertfordshire Press.

The “bottom line” of Mastrolia et al.’s paper:

- On the corporate balance sheet, one does not see what is absent because its presence is not expected, thus

- We do not spend money, but instead

- We exchange money for things worth naming & tracking.
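To make this “bottom line” concrete, here is a toy sketch (not taken from Mastrolia et al.) in which a hypothetical chart of accounts decides what appears on a report. The category names, item kinds, amounts, and the helper `conjure_balance_sheet` are all invented for illustration. The only point is that an item without a name produces no line, so nothing on the report itself signals its absence.

```python
# Hypothetical chart of accounts: only item kinds named here get a report line.
CHART_OF_ACCOUNTS = {
    "cash": "Cash",
    "inventory": "Inventory",
    "equipment": "Property, plant & equipment",
}


def conjure_balance_sheet(items):
    """Aggregate (kind, amount) pairs into the named categories.

    Items whose kind has no name in the chart are left off the report.
    Their total is returned separately only so the sketch can show what
    was dropped; a real report would offer no such line.
    """
    report = {}
    unreported = 0.0
    for kind, amount in items:
        name = CHART_OF_ACCOUNTS.get(kind)
        if name is None:
            unreported += amount
            continue
        report[name] = report.get(name, 0.0) + amount
    return report, unreported


items = [
    ("cash", 120.0),
    ("inventory", 300.0),
    ("equipment", 500.0),
    ("employee_know_how", 250.0),  # hypothetical item with no category name
    ("customer_trust", 150.0),     # hypothetical item with no category name
]
report, unreported = conjure_balance_sheet(items)
print(report)
print("value with no named category:", unreported)
```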

Conjuring House Values

Buying a house with a specific fixed-rate mortgage gives buyers a lens of reality that sellers may not share.

Numbering, as well as naming, conjures a reality.

A fixed-rate mortgage buys a fixed budgeted payment, say $4,000 per month, before it buys a house, and thus creates a distortion field for shared perceptions of economic reality:

- The owner with such a household budget and a fixed 3% mortgage rate will see a house worth about a million dollars, and

- Prospective buyers with a similar household budget, but eligible for a fixed 5% rate, will see a house worth about three-quarters of a million dollars.
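The two house values follow from standard annuity arithmetic: the loan a fixed payment can service is the present value of that stream of payments. The minimal sketch below assumes a 30-year, fully amortizing loan with monthly compounding and treats the whole $4,000 as principal and interest (no down payment, taxes, or insurance); the post does not state these terms, and `affordable_loan` is a hypothetical helper name. Under those assumptions the payment supports roughly $949,000 at 3% and roughly $745,000 at 5%, consistent with the figures above.

```python
def affordable_loan(monthly_payment, annual_rate, years=30):
    """Principal that a fixed monthly payment can fully amortize.

    Present value of an ordinary annuity with monthly compounding.
    The 30-year default term is an illustrative assumption.
    """
    r = annual_rate / 12   # monthly interest rate
    n = years * 12         # number of monthly payments
    return monthly_payment * (1 - (1 + r) ** -n) / r


payment = 4_000
for rate in (0.03, 0.05):
    print(f"{rate:.0%} fixed rate: ${affordable_loan(payment, rate):,.0f}")
```

The same payment, seen through two different rates, yields two different "ground truths" for the price of the same house.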

The history of an individual’s mortgage rate becomes the context that defines that individual’s economic ground truth for the value of housing:

- As long as the owners’ income continues to cover the budgeted payment, their lens of reality, formed by the 3% mortgage rate, receives no outcome-based feedback and keeps defaulting the value of the house to around one million dollars.

In the absence of a change in the ground-truth variable (an affordable fixed payment for shelter), the value of the house remains a confounded variable.

Meaningful, outcome-based feedback requires a change in the circumstances of the ground-truth variable:

- Following the loss of a job, the owners’ income can no longer cover the budgeted payment, forcing the sale of the house. The buyers’ lens of reality, formed by the 5% mortgage rate, may eventually become the sellers’ view of reality, allowing a deal to close at around three-quarters of a million dollars.

Further, the “cloudiness” between the two lenses of reality grows over time as the unit of account changes due to inflation, which raises the question:

- What form & frequency of updates should individuals use with decision-making models that name & track things?
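One way to see the unit of account shifting is to express the fixed nominal payment in today’s money. The constant 3% annual inflation rate below is an assumption for illustration, not a figure from the post, and `real_value` is a hypothetical helper. Under that assumption the same $4,000 payment is worth just under $3,000 in today’s money after ten years, so a house value anchored to the nominal payment drifts away from its real meaning unless the model is updated.

```python
def real_value(nominal, annual_inflation, years):
    """Express a fixed nominal amount in today's money."""
    return nominal / (1 + annual_inflation) ** years


payment = 4_000     # fixed nominal mortgage payment from the example
inflation = 0.03    # assumed constant annual inflation rate (illustrative)
for year in (0, 5, 10, 20):
    print(f"year {year:2d}: ${real_value(payment, inflation, year):,.0f} in today's money")
```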

Conjuring Reality with Machine Learning

Machine learning prediction & classification map onto Mastrolia’s list of conjuring techniques as follows:

- Concealment:

o Black-boxed algorithms with mean-based error metrics,

- Substitution:

o Cherry-picked testing models, and

- Simulation:

o Back-test overfitting.
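The Substitution and Simulation entries can be illustrated with a small, hypothetical experiment: generate many trading strategies with no real edge, keep the one with the best back-test, then check it on fresh data. All parameters (200 strategies, 250 days, 1% daily volatility) and the helper `cherry_pick_backtest` are assumptions for illustration. The selected winner shows a clearly positive in-sample mean, while its out-of-sample mean hovers around zero, because the selection step picked noise.

```python
import random
import statistics


def cherry_pick_backtest(n_strategies=200, n_days=250, daily_vol=0.01, seed=7):
    """Pick the best of many zero-edge strategies on in-sample data.

    Every hypothetical strategy's daily returns are Gaussian noise with
    mean zero, so none has a real edge. Returns the winner's mean daily
    return in sample (the reported back-test) and out of sample.
    """
    rng = random.Random(seed)

    def noise():
        return [rng.gauss(0.0, daily_vol) for _ in range(n_days)]

    in_sample = [noise() for _ in range(n_strategies)]
    out_of_sample = [noise() for _ in range(n_strategies)]

    # Substitution: keep only the strategy that looked best in the back-test.
    best = max(range(n_strategies), key=lambda i: statistics.fmean(in_sample[i]))
    return statistics.fmean(in_sample[best]), statistics.fmean(out_of_sample[best])


in_mean, out_mean = cherry_pick_backtest()
print(f"winner, in sample:     {in_mean:.4%} per day")
print(f"winner, out of sample: {out_mean:.4%} per day")
```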

Carrying this mapping through to Mastrolia’s conclusions, machine-conjuring of reality:

- Does not build models, but instead

- Promotes meaning from models, by

- Processing inputs into outputs worth naming, tracking, and predicting.

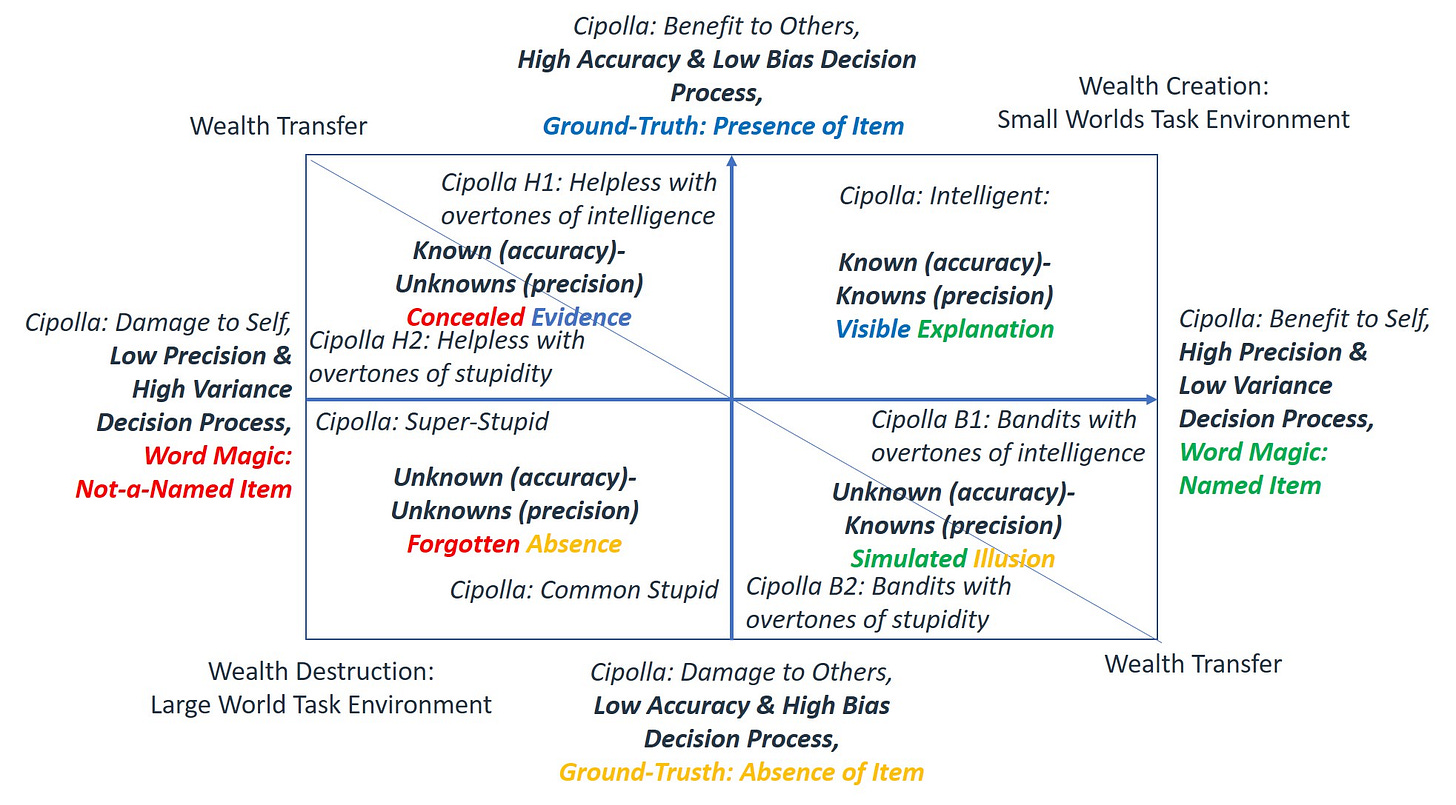

Further, the enhanced version of Carlo Cipolla’s chart, introduced and developed in prior posts, provides a framework for thinking about the conjuring of reality with machine learning:

The compound X-axis shows from left to right:

- Cipolla’s conceptual scoring from “Damage to Self” to “Benefit to Self”,

- Its measurable implementation from “Low Precision & High Variance” decision processes to “High Precision & Low Variance” decision processes, and

- The new “Word Magic” conceptual scoring from “Not-a-Named Item” to “Named Item”.

The compound Y-axis shows from the bottom to the top:

- Cipolla’s conceptual scoring from “Damage to Others” to “Benefit to Others”,

- Its measurable implementation from “Low Accuracy & High Bias” decision processes to “High Accuracy & Low Bias” decision processes, and

- The new “Ground-Truth” conceptual scoring from “Absence of Item” to “Presence of Item”.

The Top-Right quadrant describes Small World problems with:

- “Cipolla Intelligent” agents,

- Known (accuracy) Known (precision) task environments, and

- “Visible Explanations” grounded in theories.

The Top-Left quadrant describes Boundary World problems with:

- “Cipolla Helpless” agents,

- Known (accuracy) Unknown (precision) task environments, and

- “Concealed Evidence” based in Attentional Blindness.

The Bottom-Right quadrant describes Boundary World problems with:

- “Cipolla Bandits” agents,

- Unknown (accuracy) Known (precision) task environments, and

- “Simulated Illusions” based in Willful Blindness.

Finally, the Bottom-Left quadrant describes Large World problems with:

- “Cipolla Stupid” agents,

- Unknown (accuracy) Unknown (precision) task environments, and

- “Forgotten Absence” based in confusing “the Shadow for the Prey”.
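One hypothetical way to make the chart’s measurable implementation concrete is to treat accuracy as low bias (mean error against a known ground truth) and precision as low variance (spread of repeated predictions), then read off the quadrant. The tolerances and the helper `place_on_chart` are assumptions for illustration, not part of Cipolla’s chart or the prior posts; the labels are copied from the quadrant descriptions above.

```python
import statistics

# Keys are (accurate, precise): accuracy is the Y-axis, precision the X-axis.
QUADRANTS = {
    (True, True): ("Top-Right", "Small World", "Cipolla Intelligent", "Visible Explanations"),
    (True, False): ("Top-Left", "Boundary World", "Cipolla Helpless", "Concealed Evidence"),
    (False, True): ("Bottom-Right", "Boundary World", "Cipolla Bandits", "Simulated Illusions"),
    (False, False): ("Bottom-Left", "Large World", "Cipolla Stupid", "Forgotten Absence"),
}


def place_on_chart(predictions, ground_truth, bias_tol, spread_tol):
    """Place repeated predictions of one quantity on the chart.

    accurate: |mean prediction - ground truth| <= bias_tol (low bias)
    precise:  sample standard deviation <= spread_tol (low variance)
    Both tolerances are hypothetical, user-chosen thresholds.
    """
    bias = statistics.fmean(predictions) - ground_truth
    spread = statistics.stdev(predictions)
    return QUADRANTS[(abs(bias) <= bias_tol, spread <= spread_tol)]


truth = 100.0
print(place_on_chart([99.0, 100.5, 100.2, 99.8], truth, 1.0, 1.0)[0])    # Top-Right
print(place_on_chart([90.0, 110.0, 95.0, 105.0], truth, 1.0, 1.0)[0])    # Top-Left
print(place_on_chart([110.1, 109.9, 110.0, 110.2], truth, 1.0, 1.0)[0])  # Bottom-Right
print(place_on_chart([80.0, 130.0, 95.0, 125.0], truth, 1.0, 1.0)[0])    # Bottom-Left
```

Note that the Bottom-Right case looks reassuringly consistent while being wrong, which is the "Simulated Illusion" of that quadrant.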

The Rose-Colored Lenses of Ensemble Averages

Ergodicity Economics shows that mathematical-conjuring of reality with ensemble averages creates an upward bias that does not match the fate of the typical individual, because such averages include the outcomes of the few “winners-take-all” in the population:

- Ensemble averages conjure a “rosier” lens of reality than the one faced by the typical individual.
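The standard illustration of this bias in the ergodicity-economics literature, popularized by Ole Peters, is a repeated multiplicative coin toss. The payoffs below (wealth multiplied by 1.5 on heads and by 0.6 on tails) are that textbook choice, not data from this post, and `simulate_coin_toss_wealth` is a hypothetical helper. Expected wealth grows by 5% per round, yet the time-average growth factor is sqrt(0.9), about 0.949, so the typical (median) individual loses wealth while the ensemble mean rises, pulled up by a few large winners.

```python
import random
import statistics


def simulate_coin_toss_wealth(n_people=10_000, n_rounds=50, seed=1):
    """Multiplicative coin toss: wealth x1.5 on heads, x0.6 on tails.

    Everyone starts with wealth 1.0. Returns the ensemble (cross-sectional)
    mean and the median wealth after n_rounds.
    """
    rng = random.Random(seed)
    final = []
    for _ in range(n_people):
        wealth = 1.0
        for _ in range(n_rounds):
            wealth *= 1.5 if rng.random() < 0.5 else 0.6
        final.append(wealth)
    return statistics.fmean(final), statistics.median(final)


# Analytic growth factors per round.
ensemble_growth = 0.5 * 1.5 + 0.5 * 0.6   # expected wealth: +5% per round
time_growth = (1.5 * 0.6) ** 0.5          # typical trajectory: about -5% per round

mean_w, median_w = simulate_coin_toss_wealth()
print(f"ensemble growth factor per round:     {ensemble_growth:.3f}")
print(f"time-average growth factor per round: {time_growth:.3f}")
print(f"mean wealth after 50 rounds:   {mean_w:.2f}")
print(f"median wealth after 50 rounds: {median_w:.4f}")
```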

The consequences of such group-focused, “evidence-based” recommendations become worse as an individual’s experience and the matching task environment move from the Top-Right quadrant toward the Bottom-Left quadrant of the chart.

Markets as Trust Structures

Machine-conjuring of reality, based on ensemble averages, suggests at least two types of trust structures to validate its safe & effective use for individual decision-making:

- Explainable processes & outputs based on explicit theories, and

- Theory-free processes & outputs that remain opaque to explanations.

Where applicable, explainable processes & outputs can:

- Adjust group-relevant, ensemble-average recommendations into

- Individual-focused, time-average recommendations.
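As one example of an explicit theory that makes this adjustment, a decision can be scored by its time-average growth (the expected logarithm of the growth factor) instead of its ensemble-average return; for a repeated bet this is the logic behind the Kelly criterion. The sketch below assumes a hypothetical repeated fair coin toss that returns +50% or -40% on whatever fraction of wealth is exposed, and searches a grid of fractions; the helper names are illustrative. The ensemble average improves with every extra unit of exposure, so it recommends the largest fraction on the grid, while the time average peaks at a fraction of 0.25.

```python
import math


def time_average_growth(fraction, outcomes):
    """Expected log growth per round when `fraction` of wealth is exposed.

    outcomes: list of (probability, return) pairs, e.g. (0.5, 0.5) for +50%.
    """
    return sum(p * math.log(1 + fraction * r) for p, r in outcomes)


def ensemble_average_growth(fraction, outcomes):
    """Expected arithmetic return per round: linear in `fraction`."""
    return sum(p * fraction * r for p, r in outcomes)


coin = [(0.5, 0.5), (0.5, -0.4)]          # hypothetical bet: +50% or -40%
grid = [i / 100 for i in range(100)]      # exposed fractions 0.00 .. 0.99

best_ensemble = max(grid, key=lambda f: ensemble_average_growth(f, coin))
best_time = max(grid, key=lambda f: time_average_growth(f, coin))
print("ensemble-average recommendation:", best_ensemble)
print("time-average recommendation:", best_time)
```

A grid search keeps the reasoning visible; for this two-outcome bet the same optimum follows from setting the derivative of the expected log growth to zero.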

The opacity of theory-free processes & outputs moves the requirement for transparency, explanation, and understanding from model specifications to the interaction between:

- The promoter of the meaning of the model, and

- The decision-maker who must accept, or reject, what the model names & tracks as a valid sensory/conceptual perception of reality.

Closing this post with two questions:

- If model developers and promoters of the meaning of models cannot become fiduciaries, what trust structures can make machine-conjuring of reality safe & effective for individual decision-making, and

- If transaction-based trust structures prove to be a practical solution, how frequently must outcome-based feedback happen to justify a specific threshold level of conditional trust?

These and related questions make Peter Cotton’s clearinghouse for micro-predictions and matching algorithmic murmurations worth investigating as a trust structure for machine-conjuring of reality.

See prior introductory post:

To be continued…

“CTRI by Francois Gadenne” connects the dots of life-enhancing practices for the next generation, free of controlling algorithms. It draws on the lifetime experience of a retirement-age entrepreneur and co-founder of CTRI, continuously updated with insights from Wealth, Health, and Statistics research performed on behalf of large companies.